Merge branch 'port-2021-05-02'

.circleci/config.yml

0 → 100644

.github/config.yml

0 → 100644

.gitignore

0 → 100644

.gx/lastpubver

0 → 100644

LICENSE

0 → 100644

Makefile

0 → 100644

benchmarks_test.go

0 → 100644

bitswap.go

0 → 100644

bitswap_test.go

0 → 100644

decision/decision.go

0 → 100644

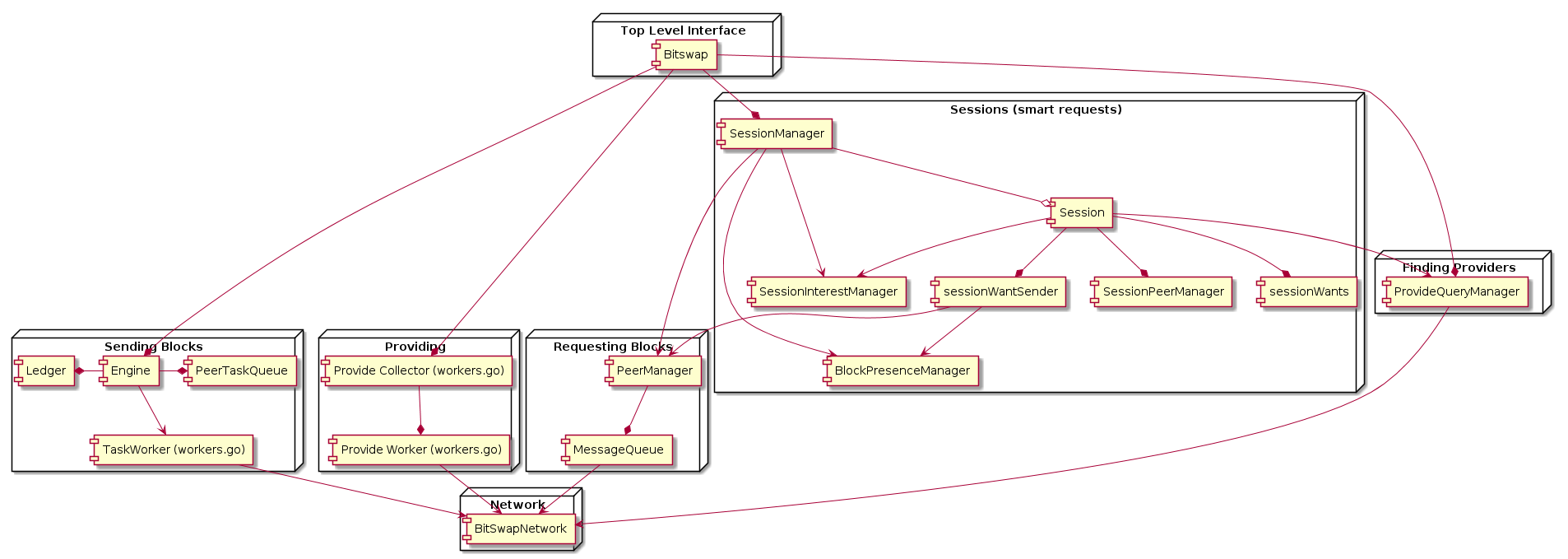

docs/go-bitswap.png

0 → 100644

80 KB

docs/go-bitswap.puml

0 → 100644

docs/how-bitswap-works.md

0 → 100644

go.mod

0 → 100644

go.sum

0 → 100644

internal/decision/engine.go

0 → 100644

internal/decision/ewma.go

0 → 100644

internal/decision/ledger.go

0 → 100644

internal/getter/getter.go

0 → 100644

internal/session/cidqueue.go

0 → 100644

internal/session/session.go

0 → 100644

message/message.go

0 → 100644

message/message_test.go

0 → 100644

message/pb/Makefile

0 → 100644

message/pb/cid.go

0 → 100644

message/pb/cid_test.go

0 → 100644

message/pb/message.pb.go

0 → 100644

message/pb/message.proto

0 → 100644

network/dms3_impl.go

0 → 100644

network/dms3_impl_test.go

0 → 100644

network/interface.go

0 → 100644

network/options.go

0 → 100644

stat.go

0 → 100644

testinstance/testinstance.go

0 → 100644

testnet/interface.go

0 → 100644

testnet/network_test.go

0 → 100644

testnet/peernet.go

0 → 100644

testnet/virtual.go

0 → 100644

wantlist/wantlist.go

0 → 100644

wantlist/wantlist_test.go

0 → 100644

wiretap.go

0 → 100644

workers.go

0 → 100644
